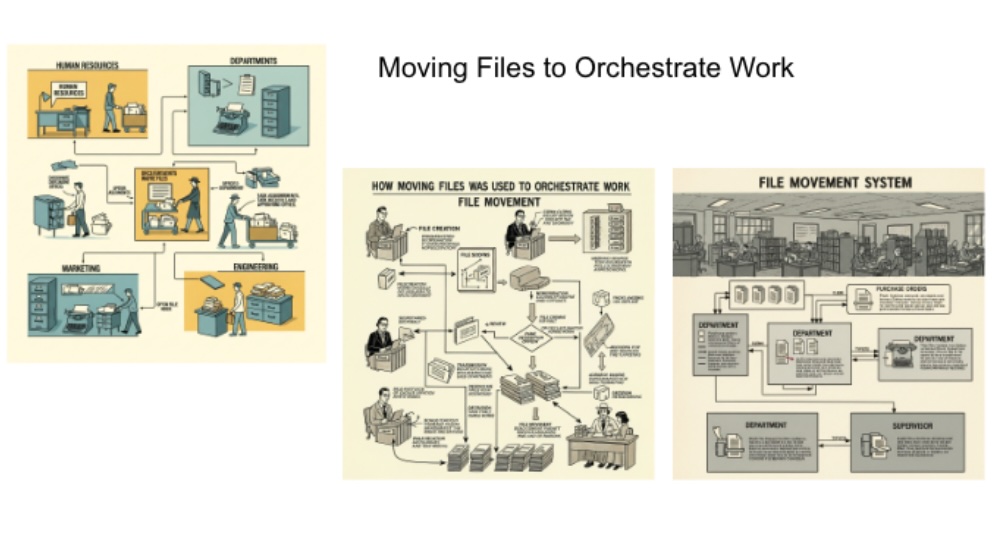

Before the 1960s, much of organizational work revolved around the written document—physical files stored in cabinets, often stacked floor to ceiling. Companies were typically structured along rigid functional lines, and the "file" itself acted as the carrier of work. A request would originate in one department, say sales or procurement, and then physically travel—sometimes literally in a manila folder—from one office to the next. Each department along the chain would add its comments, approvals, or required actions. For example, a purchase request might begin with the operations team, then move to finance for budget approval, onward to legal for contract vetting, and finally to the executive office for sign-off.

The process could take weeks, even months, with clerks serving as the human conveyors of progress. Anecdotes from large corporations of that era describe “file runners”—junior employees whose primary job was to hand-carry documents across buildings or even across town, ensuring the workflow didn’t stall. In government offices, a file’s journey could be tracked by the colorful notations and stamps on its cover, often referred to jokingly as the "life story of the document." In essence, the file was the organizing principle, defining not only the flow of information but also the rhythm of work itself.

It wasn’t until the arrival of computers, databases, and electronic communication in the 1960s and beyond that this paper-bound, sequential method of organizing began to give way to more dynamic, parallel, and integrated systems.

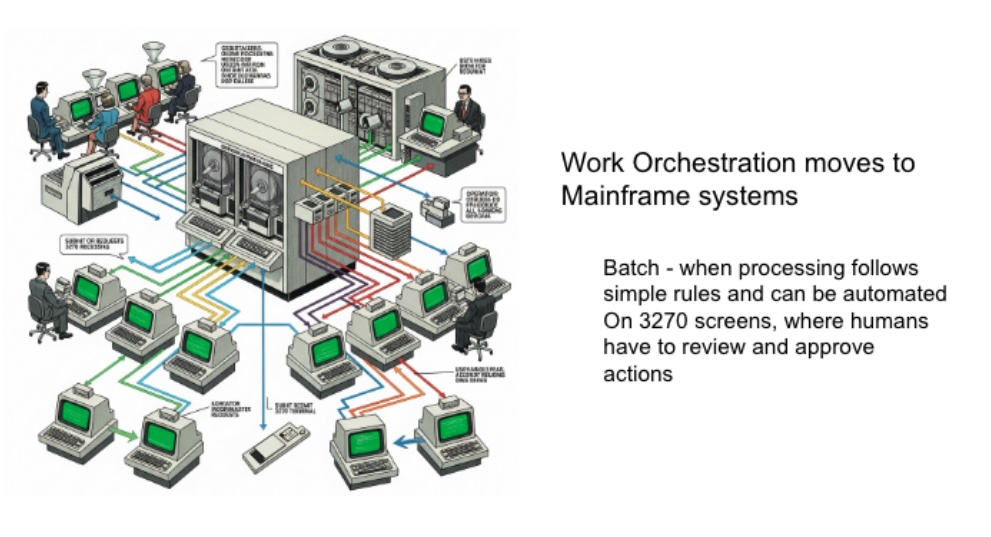

When computers first entered the corporate world in the 1960s and 1970s, large organizations established dedicated IT departments to manage massive mainframe systems. These weren’t the nimble laptops or cloud servers we know today; they were room-sized machines that required specialized environments, raised floors, and entire teams of operators to keep them running. The computers processed business requests (such as payroll, billing, or inventory management) and generated outputs that employees and customers relied on.

This era also gave rise to the dominance of hardware giants like IBM, whose System/360 line (launched in 1964) became the backbone of many Fortune 500 companies, and Digital Equipment Corporation (DEC) with its PDP and later VAX minicomputers, which democratized computing by making it more accessible to departments rather than just centralized corporate IT.

Information processing initially relied on punch cards, where each card represented a set of instructions or data. Anecdotes from that time highlight how employees would carry stacks of punch cards - sometimes hundreds at a time - to the computer center, only to discover that a single misplaced hole or missing card meant rerunning the entire batch. Over time, technology advanced from punch cards to magnetic tapes and then magnetic disks, which enabled faster and more flexible data storage. With each innovation, machines shrank in physical size but grew exponentially in processing power, setting the stage for the personal computer revolution of the 1980s.

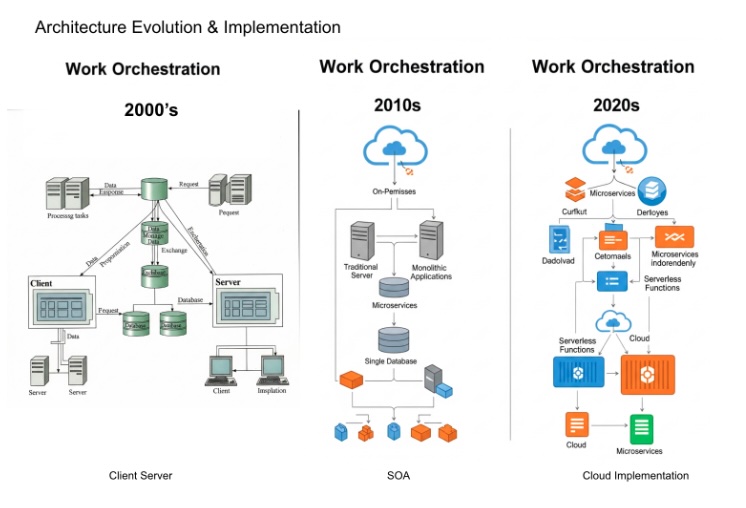

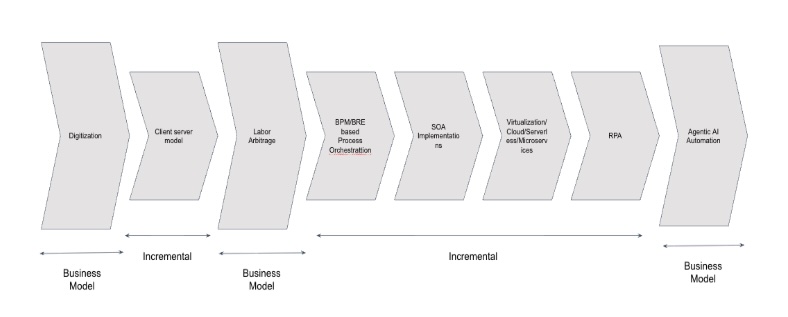

The 1980s marked a fundamental shift in computing architecture - the move from centralized monolithic mainframes to distributed systems built on the client-server model. Until then, most organizations relied on large, expensive mainframes that tightly controlled both data and applications. These systems were powerful but also inflexible, costly to maintain, and largely the domain of specialists.

The advent of affordable personal computers (PCs) and local area networking technologies democratized computing power. Companies like Microsoft, Dell, and Compaq were the young challengers of the decade, whose products put cost-effective computing on every employee’s desk. Microsoft, with MS-DOS and later Windows, became the standard software layer, while Dell and Compaq revolutionized hardware manufacturing and distribution by building modular machines at lower cost than IBM’s proprietary systems.

This client-server architecture allowed businesses to distribute workloads: data could be stored and processed on centralized servers, while applications and interfaces ran on networked client PCs. For example, corporate email systems such as Lotus Notes or early Microsoft Exchange leveraged this model, enabling collaboration in ways mainframes never could. Financial institutions adopted client-server trading systems that replaced slower, batch-oriented mainframe processes with near real-time applications, a leap that transformed Wall Street in the 1980s and 1990s. This shift also eroded the monopoly of companies like IBM, whose dominance in mainframes was challenged by a new wave of competitors. Apple, with the introduction of the Macintosh in 1984, showcased a user-friendly graphical interface that emphasized accessibility, while Compaq became the first company to successfully reverse-engineer IBM’s PC BIOS—ushering in the era of “IBM-compatible” PCs, which ultimately undercut IBM’s market control.

By the end of the 1980s, distributed client-server computing had become the organizing principle for corporate IT, laying the groundwork for internet-based systems and cloud architectures in later decades. This was the era when nimble, affordable, and networked machines replaced the centralized, monolithic model - and when companies like Microsoft and Apple transformed from upstarts into industry-defining behemoths.

Another transformative trend that accelerated in the 1990s was labour arbitrage, enabled by advances in networking and globalization. As corporations adopted distributed computing and became comfortable moving data and processes across networks, they realized that work no longer needed to be performed in the same geography as the customer. This gave rise to the practice of “outsourcing” and later “offshoring”, where non-core but essential functions - such as customer service, IT support, and back-office operations - were moved to lower-cost locations with a large, skilled workforce.

This shift was the foundation of the modern Business Process Outsourcing (BPO) sector. India, with its large English-speaking talent pool and improving telecom infrastructure, quickly became the global hub. Companies like Tata Consultancy Services (TCS), Infosys, and Wipro transitioned from being primarily IT services vendors into major outsourcing players, building large delivery centers in Bangalore, Hyderabad, and Pune.

Even multinational corporations embraced this model. General Electric (GE), under Jack Welch’s leadership, was an early pioneer in leveraging Indian talent for back-office and process work. In 1997, GE established what would later become Genpact, one of the first large-scale captive BPOs, which eventually spun off into a standalone global outsourcing powerhouse. Similarly, firms like Accenture and IBM Global Services expanded aggressively into offshore service delivery, blending global consulting with scalable, cost-effective labour models.

The BPO wave fundamentally changed the cost structures of large corporations. For example, banks shifted call centers and transaction processing to India and the Philippines, reducing costs while scaling operations. Insurance firms offshored claims processing. Tech companies outsourced help desks and infrastructure support. By the late 1990s and early 2000s, labour arbitrage was no longer just a cost-cutting measure but a strategic advantage, fueling the rise of a multi-billion-dollar global services industry.

As the sector matured, however, global clients began demanding more than just cost savings; they wanted expertise and value creation. This evolution led to the rise of Knowledge Process Outsourcing (KPO) in the 2000s. Unlike BPO, which focused on standardized processes, KPO involved specialized, high-value work such as equity research, legal document review, medical transcription, and advanced analytics.

For instance, firms like Evalueserve and Copal Partners (later acquired by Moody’s) offered financial research and analytics services to global banks. Infosys and Wipro began offering business intelligence and data analytics solutions. Law firms experimented with legal process outsourcing to India for document discovery. Even healthcare organizations leveraged offshore teams for medical coding and clinical trial data analysis.

This move up the value chain demonstrated how labour arbitrage had evolved - from handling simple, repetitive tasks to providing strategic insights and domain expertise. It also reshaped global perceptions of emerging market talent. India, the Philippines, and later Eastern Europe were no longer seen just as back-office hubs, but as centers of innovation, analytics, and domain knowledge.

As we entered the 2010s, the outsourcing industry faced both new pressures and new opportunities. Rising wages in India and other offshore markets began to erode the simple labour arbitrage advantage, while global clients increasingly demanded faster turnaround, deeper insights, and innovation - not just cost savings. At the same time, advances in automation, data analytics, and artificial intelligence opened up the possibility of reshaping outsourcing itself.

This marked the beginning of what some call “KPO 2.0” - a convergence of knowledge work and digital technologies. Instead of relying solely on large teams of skilled labour, companies began combining human expertise with automation platforms to deliver higher productivity, lower costs, and deeper insights.

For example, even traditional BPO tasks like call centers have undergone a transformation. With natural language processing (NLP) and chatbots, many routine customer queries are now handled automatically, while human agents focus on complex or high-empathy interactions. In financial services, back-office reconciliation once done by armies of clerks is increasingly automated, with human oversight limited to exceptions. In healthcare, AI helps parse clinical trial data and assists radiologists, offloading repetitive work to algorithms.

This blending of automation and human expertise is reshaping the very nature of outsourcing. Instead of a cost-arbitrage model, global service providers are now selling outcome-based solutions - reducing fraud in banking, increasing customer retention in telecom, and accelerating drug development in pharma. Labour arbitrage is no longer the core story; rather, the narrative is about scaling expertise through digital platforms.

Interestingly, this shift also mirrors earlier disruptions. Just as client-server PCs in the 1980s challenged mainframes, today’s AI-driven distributed services are challenging the legacy outsourcing model. The winners are no longer just the lowest-cost providers, but those who can integrate technology, talent, and domain knowledge to deliver measurable business value.

Looking forward, we are entering an era where the next wave of arbitrage is not geographic but cognitive - companies will arbitrage between human expertise and machine intelligence, seamlessly orchestrating the two across global delivery models.

One of the hardest challenges in enterprise software through the 1990s was embedding workflow logic directly into application code. While this gave tight integration, it made systems brittle - every small process change required new code, testing across countless edge cases, and costly deployments. For industries like banking, insurance, and telecom - where compliance rules and customer processes evolved constantly - this rigidity became a major bottleneck.

To solve this, Business Process Management Systems (BPMS) emerged. These tools separated process logic from core code, representing workflows graphically so that business analysts, not just programmers, could model and adapt them. Similarly, Business Rules Engines (BREs) let firms externalize rules (e.g., “loan applicants under 25 need a guarantor”) from core applications, so rules could be updated without rewriting software.
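To make the rules-engine idea concrete, here is a minimal Python sketch, assuming a hypothetical JSON rule schema (`when`, `require`, `message`) rather than any real product's format. The guarantor rule from the paragraph lives in data, and the application only calls a generic `evaluate` function, so the rule can change without the code changing.

```python
import json
import operator

# Rules are plain data. This JSON string stands in for a rule repository that
# business analysts would edit; the schema ("when", "require", "message") is a
# hypothetical example, not any particular BRE product's format.
RULES_JSON = """
[
  {"id": "young-applicant-guarantor",
   "when": {"field": "age", "op": "<", "value": 25},
   "require": "has_guarantor",
   "message": "Applicants under 25 need a guarantor"}
]
"""

# Comparison operators the toy rule language supports.
OPS = {"<": operator.lt, "<=": operator.le, ">": operator.gt,
       ">=": operator.ge, "==": operator.eq, "!=": operator.ne}


def evaluate(applicant: dict, rules: list) -> list:
    """Return the message of every rule whose condition holds but whose requirement is unmet."""
    violations = []
    for rule in rules:
        cond = rule["when"]
        fires = OPS[cond["op"]](applicant[cond["field"]], cond["value"])
        if fires and not applicant.get(rule["require"], False):
            violations.append(rule["message"])
    return violations


if __name__ == "__main__":
    rules = json.loads(RULES_JSON)
    print(evaluate({"age": 22, "has_guarantor": False}, rules))
    print(evaluate({"age": 22, "has_guarantor": True}, rules))
```

Moving the age cut-off from 25 to 21 would be a one-field edit to the rule data; `evaluate` and the application calling it would not change. Commercial engines add authoring tools, versioning, and conflict resolution on top of this basic separation.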

These innovations marked a turning point: organizations began decoupling “the what” (business logic and rules) from “the how” (infrastructure and application code), a foundational step toward today’s agile enterprises.

The shift from mainframes to client-server changed enterprise IT economics. Suddenly, companies could run core applications on commodity hardware from Dell or Compaq, instead of multimillion-dollar IBM mainframes. For example, Walmart built its inventory systems on client-server architecture, which allowed it to process transactions more cheaply and scale globally. Databases like Oracle and SQL Server became household names in IT departments, powering everything from payroll to e-commerce.

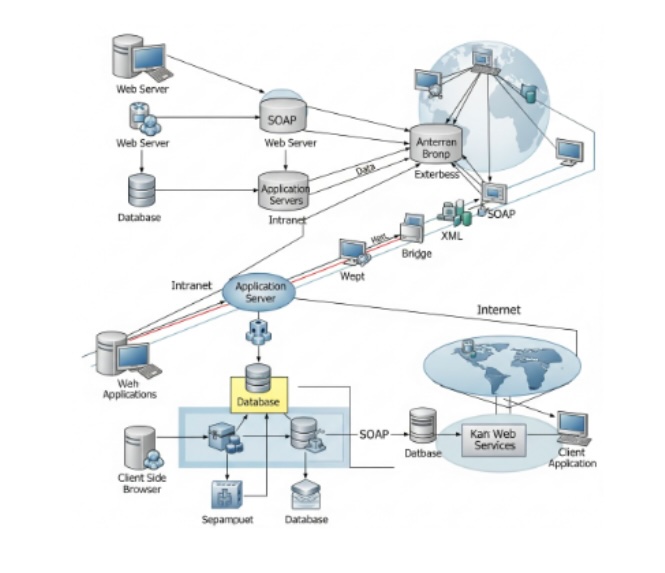

Service-Oriented Architecture (SOA, 2000s):

As businesses grew more complex, SOA emerged to modularize applications into independent services connected by standardized interfaces. Individual services could be upgraded, scaled, or replaced without touching the rest of the system, which also gave firms more flexibility in choosing vendors. A bank could, for example, swap its payment gateway service without rewriting its customer onboarding system.
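The payment-gateway example can be sketched in Python, with `typing.Protocol` standing in for the standardized service contract. In a real SOA deployment the services would be separate network endpoints (for example, SOAP or REST), so this in-process version and its class names (`LegacyGateway`, `NewGateway`, `OnboardingService`) are hypothetical illustrations of the decoupling, not any bank's actual design.

```python
from typing import Protocol


class PaymentGateway(Protocol):
    """Service contract: anything with this method can act as the payment service."""

    def collect(self, account_id: str, amount_cents: int) -> str:
        ...


class LegacyGateway:
    def collect(self, account_id: str, amount_cents: int) -> str:
        return f"legacy-txn:{account_id}:{amount_cents}"


class NewGateway:
    def collect(self, account_id: str, amount_cents: int) -> str:
        return f"new-txn:{account_id}:{amount_cents}"


class OnboardingService:
    """Customer onboarding knows only the PaymentGateway contract, not the provider."""

    def __init__(self, gateway: PaymentGateway) -> None:
        self.gateway = gateway

    def onboard(self, account_id: str, opening_fee_cents: int) -> dict:
        txn = self.gateway.collect(account_id, opening_fee_cents)
        return {"account": account_id, "fee_txn": txn}


if __name__ == "__main__":
    print(OnboardingService(LegacyGateway()).onboard("acct-1", 2500))
    print(OnboardingService(NewGateway()).onboard("acct-1", 2500))
```

Swapping `LegacyGateway()` for `NewGateway()` changes which provider collects the fee, while `OnboardingService` is untouched because it depends only on the interface.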

Cloud computing changed the game by offering elastic infrastructure on demand. Amazon Web Services (AWS), launched in 2006, became the backbone of startups and enterprises alike.

These transitions, from client-server to SOA to cloud-native, represent the unbundling of the monolith. Each era expanded modularity, scalability, and resilience, making enterprise IT more adaptable and better aligned with the business.

Client-server architecture let companies run on cheaper commodity hardware and made it easier to replace or upgrade individual parts of the system.

The move to SOA decoupled these components further, making them even easier to swap out. Components such as application servers, databases, data warehouses, and web servers could evolve on their own timelines, and each category produced its own champions: Oracle for relational databases (RDBMS), a number of players for NoSQL, and the WebLogic and WebSphere lines for application servers.

Finally, the move to the cloud, together with serverless architectures and microservices, further enabled dynamic scaling, redundancy, and availability for these applications. This transformation gave rise to large cloud providers such as AWS, Google Cloud Platform (GCP), Microsoft Azure, and Oracle Cloud Infrastructure (OCI).
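Dynamic scaling usually comes down to a control rule that adjusts capacity to observed load. The sketch below follows the proportional formula described in the Kubernetes Horizontal Pod Autoscaler documentation, desired = ceil(current replicas × current metric / target metric), clamped to configured bounds. The function name and default bounds are assumptions, and the real controller adds details (such as a tolerance band and stabilization windows) that are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Proportional scaling rule in the style of the Kubernetes HPA, clamped to bounds.

    Omits the tolerance band and stabilization behaviour of the real controller.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    print(desired_replicas(4, 90.0, 60.0))  # load above target: scale out
    print(desired_replicas(4, 30.0, 60.0))  # load below target: scale in
```

With 4 replicas at 90% CPU against a 60% target, the rule asks for 6 replicas; at 30% it scales the same 4 down to 2.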

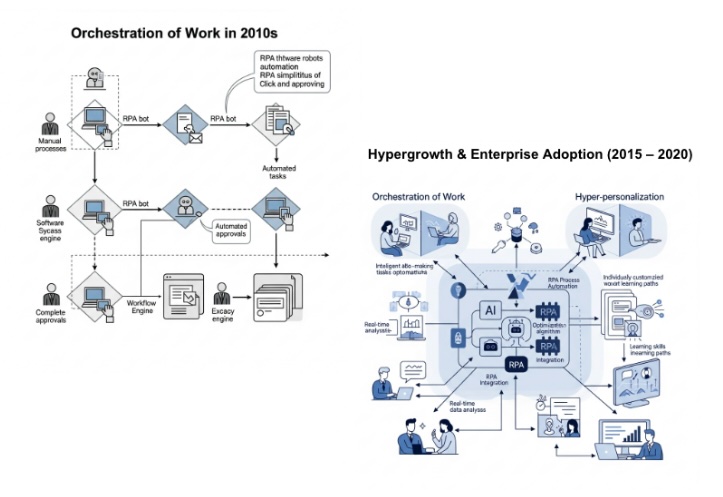

Before RPA became a term, companies were already automating tasks, but in primitive ways.

Example: Banks used screen scrapers to extract data from mainframe terminals into spreadsheets for risk reports, saving analysts hours of manual data entry.

Around the mid-2000s, the term Robotic Process Automation (RPA) began gaining traction. Unlike workflow automation, which required system integration, RPA focused on mimicking human actions at the UI level - logging into apps, moving files, filling forms, generating reports.

Example: A telecom provider automated SIM card activation through RPA bots that logged into multiple legacy systems, cutting provisioning time from 48 hours to a few minutes.

RPA became a mainstream enterprise strategy as companies looked for efficiency, especially in financial services, insurance, and healthcare.

Example: GE Capital and Deutsche Bank automated thousands of finance and compliance tasks - like reconciliations and regulatory reporting - reducing error rates while freeing employees for higher-value work.

Pure RPA had limits: it worked best for structured, rules-based tasks. To handle unstructured data (emails, PDFs, documents, images), RPA converged with AI, creating Intelligent Automation.

Example:

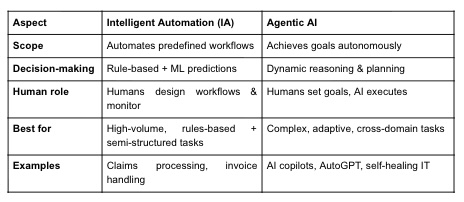

Intelligent Automation is the fusion of Robotic Process Automation (RPA) with Artificial Intelligence (AI) technologies (e.g., NLP, OCR, ML, computer vision) to handle end-to-end business processes, including those involving unstructured data, judgment, and learning.

Whereas RPA automates structured, rules-based, repetitive tasks, Intelligent Automation extends this to more complex processes by adding intelligence.
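The difference can be sketched as a small Python pipeline: a deterministic, RPA-style step that only accepts structured records, preceded by an extraction step that turns unstructured text into those records. The regular expressions are a deliberately crude, hypothetical stand-in for the NLP/OCR/ML models an Intelligent Automation platform would use, and anything the extractor cannot parse is routed to a person.

```python
import re
from typing import Optional, Union


def rpa_post_invoice(record: dict) -> str:
    """Classic RPA: deterministic, rules-based handling of a structured record."""
    if record["amount"] <= 0:
        return "rejected: non-positive amount"
    return f"posted invoice {record['invoice_id']} for {record['amount']:.2f}"


# Hypothetical stand-in for the AI layer. A production system would use a
# trained NLP/OCR model here; these regexes only illustrate the role it plays.
INVOICE_RE = re.compile(r"[Ii]nvoice\s*#?\s*(?P<id>[A-Z]+-\d+)")
AMOUNT_RE = re.compile(r"\$\s*(?P<amt>\d+(?:\.\d{1,2})?)")


def extract_fields(text: str) -> Optional[dict]:
    """Turn free text (e.g. an email body) into a structured record, or None."""
    inv = INVOICE_RE.search(text)
    amt = AMOUNT_RE.search(text)
    if inv is None or amt is None:
        return None
    return {"invoice_id": inv.group("id"), "amount": float(amt.group("amt"))}


def process(item: Union[dict, str]) -> str:
    """Structured input goes straight to the bot; text goes through extraction first."""
    if isinstance(item, dict):
        return rpa_post_invoice(item)
    fields = extract_fields(item)
    if fields is None:
        return "routed to human: could not extract fields"
    return rpa_post_invoice(fields)


if __name__ == "__main__":
    print(process({"invoice_id": "INV-7", "amount": 120.0}))
    print(process("Hi, please pay invoice #INV-42 for $99.50 by Friday."))
    print(process("Can you call me about my account?"))
```

The bot itself never changes; adding intelligence widens the range of inputs that can reach it.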

Key Components:

Examples:

Business Value:

Agentic AI refers to AI systems that act as autonomous agents, capable of planning, reasoning, and executing tasks proactively, often without human intervention. Unlike Intelligent Automation (IA), which is still orchestrated by predefined workflows, Agentic AI can decide what to do next to achieve a goal.
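The contrast between a predefined workflow and an agent that chooses its next step can be shown with a toy agent loop in Python. The planner here is a hard-coded function standing in for an LLM, and the tool names, invoice data, and `run_agent` helper are hypothetical. The point is the control flow: at each step the agent inspects what it has observed so far and picks the next action toward its goal (an approved invoice), so different inputs take different paths.

```python
from typing import Callable, Dict, List

# Hypothetical back-office data the agent's tools can read.
INVOICES = {
    "INV-42": {"amount": 99.5, "po": None},    # missing purchase order
    "INV-43": {"amount": 10.0, "po": "PO-9"},  # already matched to a PO
}


def fetch_invoice(state: dict) -> None:
    state["invoice"] = dict(INVOICES[state["invoice_id"]])  # copy; leave source data intact


def look_up_po(state: dict) -> None:
    state["invoice"]["po"] = "PO-7"  # pretend lookup in a procurement system


def approve(state: dict) -> None:
    state["approved"] = True


TOOLS: Dict[str, Callable[[dict], None]] = {
    "fetch_invoice": fetch_invoice,
    "look_up_po": look_up_po,
    "approve": approve,
}


def plan_next_step(state: dict) -> str:
    """Stand-in for an LLM planner: choose the next tool from the current observations."""
    if "invoice" not in state:
        return "fetch_invoice"
    if state["invoice"]["po"] is None:
        return "look_up_po"
    if not state.get("approved"):
        return "approve"
    return "done"


def run_agent(invoice_id: str, max_steps: int = 10) -> List[str]:
    """Plan, act, observe, repeat until the planner reports the goal is reached."""
    state: dict = {"invoice_id": invoice_id}
    trace: List[str] = []
    for _ in range(max_steps):  # step budget guards against a planner that never finishes
        action = plan_next_step(state)
        trace.append(action)
        if action == "done":
            break
        TOOLS[action](state)
    return trace


if __name__ == "__main__":
    print(run_agent("INV-42"))
    print(run_agent("INV-43"))
```

For `INV-42` the agent fetches the invoice, notices the missing purchase order, looks it up, and then approves; for `INV-43` it skips the lookup entirely. A fixed workflow would run the same steps for both.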

This is powered by Large Language Models (LLMs) and multi-agent systems where AI agents can:

Key Characteristics:

Examples:

Business Value:

A key catalyst driving the adoption of Agentic AI is its ability to deliver transformation through operational expenditure (Opex) rather than capital expenditure (Capex). Traditionally, large-scale transformation initiatives - whether in technology, process re-engineering, or automation - required heavy upfront Capex investments in infrastructure, platforms, and long multi-year programs. This often limited adoption to organizations with significant budgets and long investment horizons.

With Agentic AI, however, transformation can be achieved in a pay-as-you-go model leveraging Opex budgets. AI agents can be deployed rapidly via cloud-based platforms, consumed as services, and scaled up or down based on demand. Crucially, once transformation is in place, operational costs are significantly reduced - for example, through automated customer support, streamlined finance operations, or intelligent supply chain orchestration. This means that the cost savings begin to fund the transformation itself, reducing the need for large upfront commitments.

This shift represents more than just a funding model - it signals a business model transformation. By removing the Capex barrier, organizations of all sizes can now experiment, adopt, and scale Agentic AI in a way that was previously only available to large enterprises. Just as cloud computing democratized access to advanced IT infrastructure without requiring servers and data centers, Agentic AI is democratizing access to enterprise-grade automation and intelligence through Opex-based consumption models.

In short, Agentic AI is not just reshaping workflows; it is redefining how transformation is financed and delivered, creating a structural shift in the way businesses approach technology adoption.

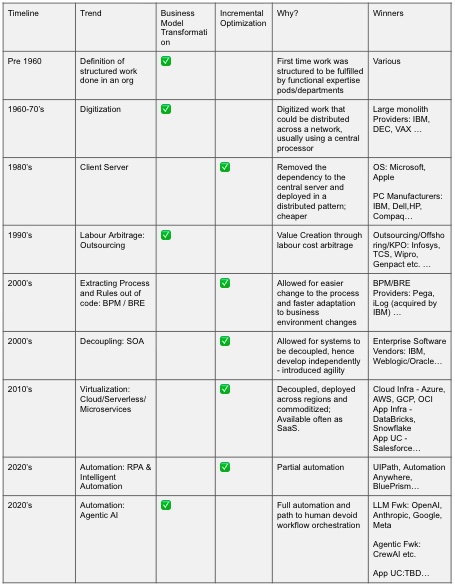

The table below summarizes these trends and classifies each one as either a business model innovation or an incremental change.

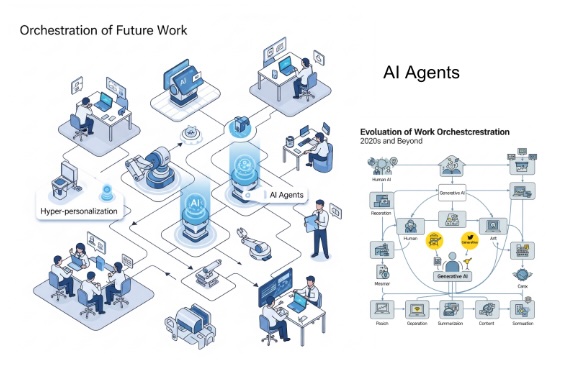

We’re moving toward autonomous operations, where bots/agents don’t just execute pre-defined scripts but:

In this vision, we aren’t just talking about “bots doing tasks” but about building a self-healing, AI-driven digital workforce that augments humans in decision-making.

While many organizations today are experimenting with building and deploying their own agentic AI solutions, we expect this trend to evolve rapidly. Over time, the focus will shift from fragmented, in-house experimentation toward consolidation, with a handful of specialized agentic AI companies emerging as scaled providers. These companies will offer suites of industry-specific agents - for example, financial services agents for compliance monitoring, healthcare agents for prior authorization and claims, or retail agents for supply chain orchestration - covering the majority of functional tasks within end-to-end workflows.

In the early stages, enterprises will still need to manage their own agents - handling quality control, exception management, and human-in-the-loop interventions where an agent “drops down” to a human for oversight. But as adoption matures, we anticipate a second wave of consolidation: the management of agent ecosystems itself will be outsourced. Just as cloud infrastructure moved from on-premise IT to centralized hyperscalers, the management of agent suites is likely to move to a few large agent operations providers, who can deliver economies of scale, standardized governance, and continuous improvement.
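The "drop down to a human" pattern is often implemented as a confidence gate. Below is a minimal Python sketch, assuming each agent reports a decision together with a confidence score in [0, 1]; the `Router` class, the `"unknown"` decision label, and the 0.8 threshold are hypothetical choices, not a standard.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AgentResult:
    task_id: str
    decision: str
    confidence: float  # assumed: a score in [0, 1] reported by the agent


@dataclass
class Router:
    threshold: float = 0.8  # hypothetical cut-off; tuned per workflow in practice
    auto_completed: List[AgentResult] = field(default_factory=list)
    human_queue: List[AgentResult] = field(default_factory=list)

    def route(self, result: AgentResult) -> str:
        """Accept confident, definite results; drop everything else down to a human."""
        if result.confidence >= self.threshold and result.decision != "unknown":
            self.auto_completed.append(result)
            return "auto"
        self.human_queue.append(result)
        return "human"


if __name__ == "__main__":
    router = Router()
    for r in [AgentResult("claim-1", "approve", 0.95),
              AgentResult("claim-2", "deny", 0.55),
              AgentResult("claim-3", "unknown", 0.99)]:
        print(r.task_id, router.route(r))
```

Lowering the threshold sends more work straight through; raising it sends more to reviewers, which is the quality-control trade-off described above.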

In this model, organizations will consume agents much like SaaS or cloud services today - provisioning them on demand, integrating them into workflows, and relying on specialized providers for monitoring, compliance, and optimization. This represents not just a shift in technology, but a restructuring of the value chain, where agent development, deployment, and management become concentrated in a few scaled players rather than dispersed across thousands of enterprises.

To understand how these trends are shaping our investment thesis and strategy, we invite you to read the next post in this series. There, we outline our perspective on the AI stack, and highlight the key strategies and assets we look for in companies - particularly those building durable moats to sustain long-term competitive advantage.