Our analytics platform uses only corporate data stores and serves only internal users and use cases. We chose to move it to the cloud. Our reasoning is as follows.

Most enterprises perceive the cloud as risky: assets outside the enterprise perimeter are assumed to be more prone to attack. Yet consider the mobility trends of our user population, both the desire to access every workload from a mobile device anywhere in the world and the growth of BYOD. Given these trends, it is becoming increasingly clear that perimeter-based protection may not be the best solution, because of the large number of exceptions that have to be built into the firewalls to allow for these disparate use cases.

Here are some of the reasons why we chose otherwise:

Let's consider the security aspect:

Maintaining and patching a perimeter-based enterprise network is expensive and risky. One wrong firewall rule or access exception can jeopardize the entire enterprise. Consider the following:

A typical APT (Advanced Persistent Threat) has the following attack phases:

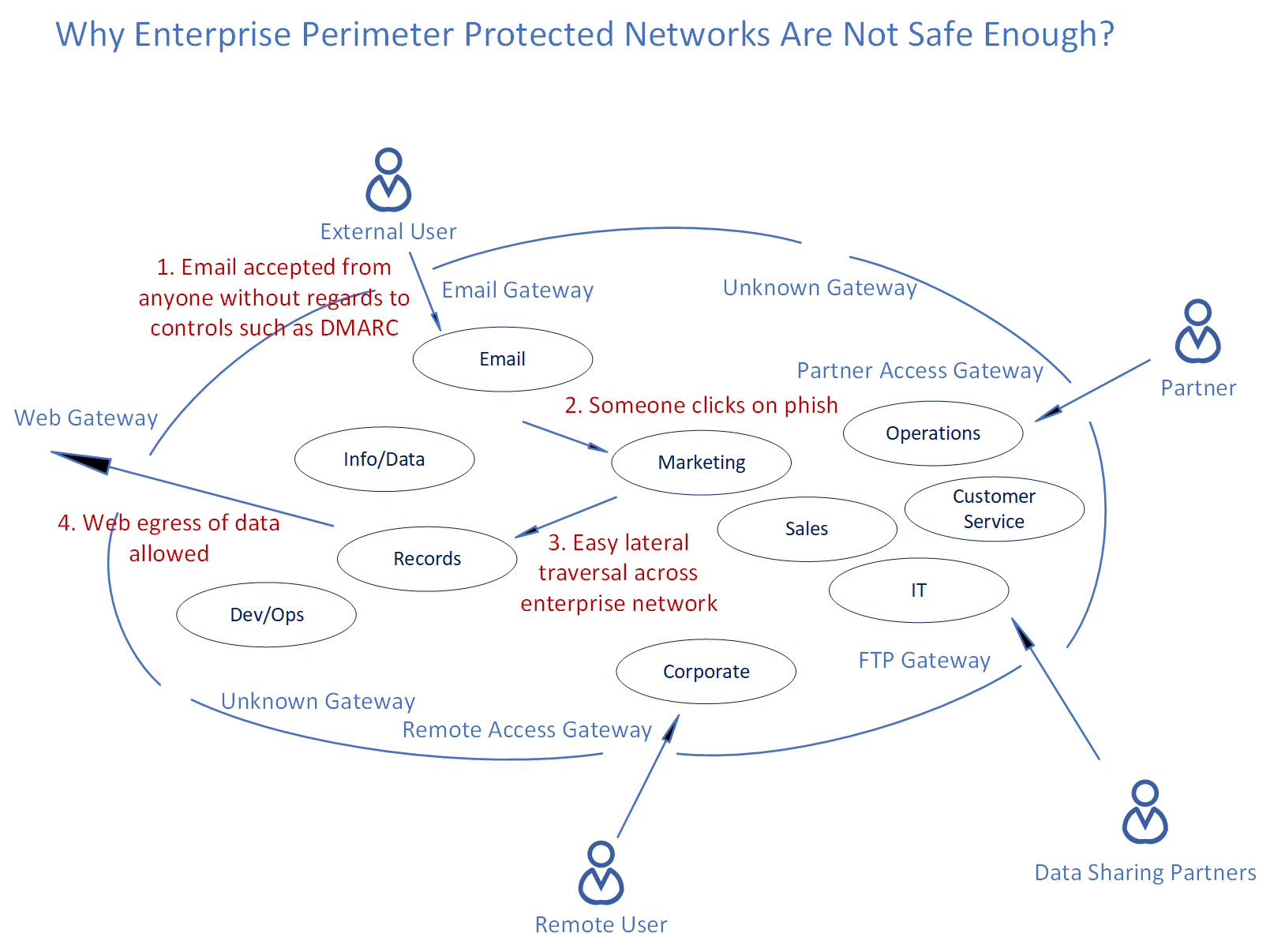

An enterprise usually allows all incoming external email, and most organizations do not implement email authentication controls such as DMARC (Domain-based Message Authentication, Reporting & Conformance). There are also IT systems that accept incoming file transfers, so some kind of FTP gateway is provided. Third-party vendors and suppliers come in through a partner access gateway. Remote users within the company need to access firm resources to do their jobs, which means a remote access gateway. Employees and consultants inside the enterprise perimeter need to reach most external websites and content, which implies a web gateway. Finally, there are the unknown gateways that were opened up in the past and never documented; people are now hesitant to remove those rules from the firewall because they do not know who is using them. Given all these holes built into the enterprise perimeter, imagine how easy it is to allow web egress of confidential data through even a simple misconfiguration of any of them.

As you can tell from the above diagram, the following sequence could result in a perimeter breach:

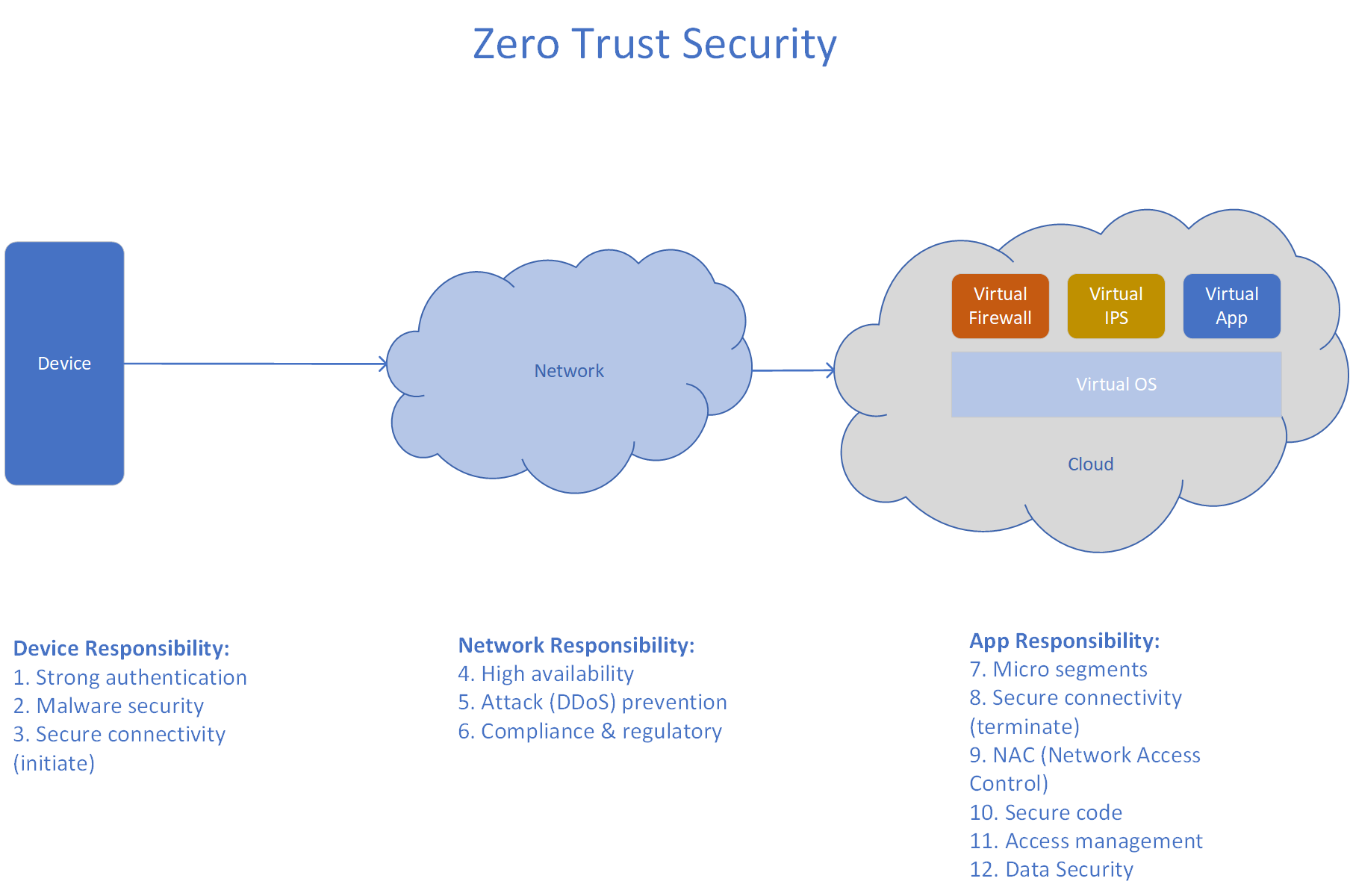

On the other hand, consider a micro-segmented cloud workload, which provides multi-layer protection through DID (defense in depth). Since the workload is very specific, the rules on the physical firewall as well as on the virtual firewall or IPS (Intrusion Prevention System) are very simple and only need to account for the access allowed for this workload. Additional changes in the enterprise do not result in these rules being changed. So distributing workloads through micro-segmentation and isolation actually reduces the risk of a misconfiguration or a data breach.
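To make the "very simple rules" point concrete, here is a minimal sketch of a default-deny allowlist scoped to a single workload. The rule set, subnets, and ports are hypothetical values chosen for illustration, not our actual configuration, and a real deployment would express the same idea in the cloud provider's firewall or security-group rules rather than in application code.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Rule:
    source: str  # CIDR block allowed to connect (hypothetical values below)
    port: int    # destination port on the workload


# Hypothetical rule set for one micro-segmented analytics workload.
# It only describes this workload, so it stays small and stable.
ANALYTICS_RULES = [
    Rule(source="10.20.0.0/16", port=443),   # assumed internal user range, HTTPS
    Rule(source="10.30.5.0/24", port=5432),  # assumed corporate data store subnet
]


def is_allowed(rules, src_ip, dst_port):
    """Default deny: traffic passes only if some rule explicitly matches."""
    addr = ip_address(src_ip)
    return any(
        addr in ip_network(rule.source) and dst_port == rule.port
        for rule in rules
    )


if __name__ == "__main__":
    print(is_allowed(ANALYTICS_RULES, "10.20.3.4", 443))    # internal user, HTTPS
    print(is_allowed(ANALYTICS_RULES, "203.0.113.9", 443))  # external address
```

Because the list covers only one workload, a change elsewhere in the enterprise never requires touching it, which is the property the paragraph above relies on.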

Another key aspect to consider is the distribution of nodes. Botnets have an interesting C&C (Command and Control) design: if the C&C node is knocked out, another node can assume the C&C function and the botnet continues its attack. Cloud workloads can implement the same structure, so that if one of the C&C nodes falls victim to a DoS (Denial of Service) attack, another can assume its role. Unlike an enterprise perimeter, a cloud deployment does not have a single attack surface with an associated single point of failure. A successful DoS attack does not neutralize all assets and capabilities; other nodes take over and continue to function seamlessly, providing high availability.
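The failover idea can be sketched in a few lines. This is a deliberately simplified illustration, assuming a shared view of node health and a "lowest healthy node ID wins" rule; the `Cluster` class and node names are hypothetical, and production systems typically rely on a proper leader-election or consensus mechanism instead.

```python
class Cluster:
    """Toy model of a node pool in which any healthy node can coordinate."""

    def __init__(self, node_ids):
        # Every node starts out healthy.
        self.healthy = {node_id: True for node_id in node_ids}

    def mark_down(self, node_id):
        # For example, the node stopped responding during a DoS attack.
        self.healthy[node_id] = False

    def mark_up(self, node_id):
        self.healthy[node_id] = True

    def coordinator(self):
        """Return the lowest-ID healthy node, or None if none is left."""
        alive = sorted(n for n, ok in self.healthy.items() if ok)
        return alive[0] if alive else None


if __name__ == "__main__":
    cluster = Cluster(["node-a", "node-b", "node-c"])
    print(cluster.coordinator())  # current coordinator
    cluster.mark_down("node-a")   # coordinator is knocked out
    print(cluster.coordinator())  # another node assumes the role
```

The service only becomes unavailable when every node is down, which is the contrast with a single perimeter gateway.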